Originally published on MSDN Magazine.

Microsoft has been pushing hard at mixed reality (MR) development since it introduced HoloLens in 2014, and at the Build 2018 conference in May, it provided a wealth of great insight into new features in the Unity development environment for MR, as well as UI design best practices. In this article, I’ll leverage the technical guidance presented at Build to show how the Microsoft Fluent Design System can be used to build immersive experiences in MR—specifically, a HoloLens app that allows non-verbal children to communicate via pictograms.

Microsoft’s Fluent Design System is built on three core principles:

Adaptive: MR must bridge and mingle the real world and digital components to create a holistic experience. As such, the UI must be mindful of the environment, while enhancing (but not replacing) the user’s real-world experience.

Empathetic: Empathetic design in MR focuses on understanding the user’s intent and needs within the app experience. As an example, not all users can use their hands for gestures, so the HoloLens clicker and voice commands provide input modalities that are empathetic to the user’s needs.

Beautiful: Beautiful apps are a major challenge in MR. As a designer or developer, you must extend the real-world experience without overwhelming it. Careful planning and the right tools are needed.

Unity is the most common tool for building MR experiences. According to Unity, 90 percent of HoloLens and MR apps are built with its development environment. While Unity has its own UI system, it doesn’t easily translate to Fluent Design. The team at Microsoft has built the Mixed Reality Toolkit (bit.ly/2yKyW2r) and Mixed Reality Design Lab (bit.ly/2MskePH) tools, which help developers build excellent UXes through the configuration of components. Using these projects, developers can avoid complex graphic design work and focus on the functionality of their app. Both the Mixed Reality Toolkit and Design Lab are well documented with many code examples. In this article I use the Mixed Reality Toolkit 2017.4.0.0 Unity Package to build an interface and show you how to pull the various components together into your own cohesive, unique interface.

Before you set up your Unity environment, make sure to complete the Getting Started tutorial from the Mixed Reality Toolkit (bit.ly/2KcVvlN). For this project, I’m using the latest (as of this writing) Unity build 2018.2.08b. Using Unity 2018.2 required an update to the Mixed Reality Toolkit, but the upgrade did not cause any conflicts for the functionality implemented in this article.

Designing Your UI for MR

An excellent MR experience starts with an effective UI flow. Unlike Web or mobile apps, the UI of an MR app has one big unknown: the environment. In Web development you must be mindful of the screen resolution and browser capabilities, while in mobile development it’s pixel density and phone capabilities. But in MR the variable nature of the user’s physical surroundings becomes paramount.

By targeting a specific environment I can tune for the physical space and for the specific capabilities of the device being used. In my example app, I’m designing for a classroom setting where students will use the app with their teacher. This is a well-lit and relatively quiet environment, which is very different from a warehouse or a night club. Plan your UI based on the environment.

The environment is not only external to the user but also driven by the user’s device capabilities. When thinking about my UI, I need to be aware of the possible controls to use. Given my users’ environment and capabilities, speech commands won’t play a key role because my target users are non-verbal. Gestures would be limited as my target users could also have fine motor skill challenges. This leads me to large buttons that users can select with tools like the HoloLens clicker.

With that in mind, I need to decide how to lay out my UI for a beautiful design. If you’re a Web developer with Bootstrap (or a similar UI framework), you’re familiar with building to the Grid. In UWP, you have layout controls like StackPanel, Grid and Canvas. In the Mixed Reality Toolkit you have the Object Collection Component.

The Object Collection Component can be found in the Mixed Reality Toolkit under Assets | UX | Scripts | Object Collection. To use it, create an Empty game object in your scene. Name the empty game object “MainContainer” and then add the Object Collection component to it. For testing purposes, add a cube as a child object of the MainContainer. Set the cube’s scale to x = 0.5, y = 0.2, z = 0.1 so it looks like a thin rectangle. Now duplicate that cube eight times to have a total of nine cubes as child objects of the MainContainer.

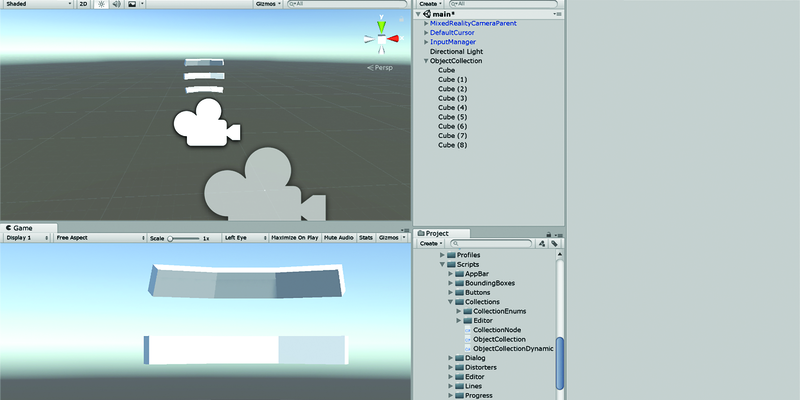

Once the cubes are added, press the Update Collection button in the Inspector to apply the Object Collection layout to the child objects. By default, the object collection uses the Surface Type of Plane. For my UI, I want the collection to wrap around the user to feel more immersive. To do this, update the Surface Type for the object collection to Sphere in the Inspector. Now all the cubes should appear as shown in Figure 1. Object collections provide multiple Surface Types to allow your UI to engage the user for your specific scenario. Later in the article I’ll show you how to use a different Surface Type to achieve a different goal.

Figure 1 Updating the Surface Type to Sphere

What Are We Building?

Non-verbal children have books of pictograms that they use with a teacher to express their needs. Each pictogram is a word or phrase that the child and teacher build together. In this article, I’ll show you how to build an MR experience to take the book into the HoloLens, so students can build a sentence to communicate with their teachers.

I’ll start by using the Object Collection to add a bunch of buttons for the user to select to build a sentence. The buttons will be the pictograms. Start by adding a Resources | Data folder into your project window. Inside the data folder create a words.json file to represent the words in the app. The sample code employs a short words file to keep things simple.
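The words file itself isn’t reproduced in this article, so the following is only a hypothetical sketch of what words.json could look like. The field names (category, text, image) and the top-level words array follow the Word and WordsData classes used by the WordLoader; the specific words, categories and icon names are made-up placeholders. The image value is assumed to be an icon name without a file extension, because the loader resolves it as a resource under an Icons folder.

```json
{
  "words": [
    { "category": "pronoun", "text": "I", "image": "i" },
    { "category": "verb", "text": "am", "image": "am" },
    { "category": "feeling", "text": "hungry", "image": "hungry" }
  ]
}
```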

In the project window, create a Scripts folder and inside that folder create a new C# script called WordLoader. This component reads the words.json file and converts it into a collection of C# Word objects. In the Design Lab there are example projects that illustrate the finished sample of reading data from a file and displaying it in an MR experience. I’m adapting the code from the Periodic Table project to keep this example concise and familiar, as shown in Figure 2. Please check out the Periodic Table project at bit.ly/2KmSizg for other features and capabilities built on top of reading a data file and binding the results to an object collection.

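    // Data classes that mirror the structure of words.json.
    // JsonUtility requires "using UnityEngine;" at the top of the file
    // (Unity's default script template already includes it).
    [System.Serializable]
    class Word
    {
        public string category;
        public string text;
        public string image;
    }

    [System.Serializable]
    class WordsData
    {
        public Word[] words;

        // Parses the raw JSON text into a WordsData instance.
        public static WordsData FromJSON(string data)
        {
            return JsonUtility.FromJson<WordsData>(data);
        }
    }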

Next, write the code shown in Figure 3 for the WordLoader component. This component loads the words from the JSON file and adds them as buttons to the Object Collection. There are two public variables in the component: Parent is the Object Collection that holds the words and WordPrefab is the prefab used to represent the words in the MR experience.

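    // Requires these directives at the top of the file (namespaces as found in the HoloToolkit package):
    //   using UnityEngine;
    //   using HoloToolkit.Unity.Buttons;
    //   using HoloToolkit.Unity.Collections;
    public class WordLoader : MonoBehaviour
    {
        public ObjectCollection Parent;   // Object Collection that holds the word buttons
        public GameObject WordPrefab;     // Prefab used to represent each word

        private void OnEnable()
        {
            // Skip loading if the buttons have already been created.
            if (Parent.transform.childCount > 0)
                return;

            // Resources.Load takes a path relative to a Resources folder, without the file extension.
            TextAsset dataAsset = Resources.Load<TextAsset>("Data/words");
            WordsData wordData = WordsData.FromJSON(dataAsset.text);

            foreach (Word w in wordData.words)
            {
                // Create one button per word as a child of the Object Collection.
                GameObject newWord = Instantiate<GameObject>(WordPrefab, Parent.transform);
                newWord.GetComponent<CompoundButtonText>().Text = w.text;
                newWord.GetComponent<CompoundButtonIcon>().OverrideIcon = true;
                string iconPath = string.Format("Icons/{0}", w.image);
                newWord.GetComponent<CompoundButtonIcon>().iconOverride = Resources.Load<Texture2D>(iconPath);
            }

            // Arrange the new buttons according to the collection's layout settings.
            Parent.UpdateCollection();
        }
    }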

Once all the words are loaded to the Parent, calling Parent.UpdateCollection will arrange the newly created buttons based on the layout of the Object Collection component. Each time you change a property in the Object Collection component, call Update Collection to ensure that all game objects are updated properly.

Now in the Unity Editor you create an empty game object called Managers, which holds all the utility components for the scene. Add the WordLoader component to Managers, then set Parent to MainContainer. Finally, set WordPrefab to use the Holographic Button prefab (found in HoloToolkit | UX | Prefabs | Buttons). These buttons implement Fluent Design concepts like using light to reveal focus and action. When the user sees the button light up, he knows what’s selected.

Running the app now creates buttons for each word in the word data file (see Figure 4). The Holographic buttons will surround the user in a sphere. While in Play Mode, feel free to update the Object Collection’s Surface Type to experiment with other layouts. Adjust the cell spacing and row counts to find the right mix for your experience. Remember to Update Collection after each change to see the change in the editor.

Figure 4 Viewing the Words as Holographic Buttons in the Object Collection

Keeping up with the User

Once the Object Collection is built it’s set to specific coordinates in the world. As the user moves, the Object Collection will stay where it was originally created. For a classroom this doesn’t work because children don’t always stay at their desk. I need to update the UI to stick with the user as they move around the real world. In Fluent Design you need to be adaptive to the environment, even when the user moves and the surroundings change.

At the Build 2018 conference, a technical session (“Building Applications at Warehouse Scale for HoloLens,” bit.ly/2yNmwXt) illustrated the challenges with building apps for spaces larger than a table top. These range from keeping UI components visible when blocked by objects like a forklift in a warehouse to keeping the UI accessible as the user moves around a space for projects like interior design. The Mixed Reality Toolkit gives you some tools to solve some of these issues by using the Solver System.

The Solver System allows game objects to adjust their size or position based on the user’s movement through the world. In Unity, you can see how some Solvers work in the scene using the editor. To add a Solver, select the MainContainer object and add the SolverBodyLock component (found in HoloToolkit | Utilities | Scripts | Solvers). The SolverBodyLock component allows the sphere of buttons to stay with the user, because as the user moves throughout the space the MainContainer moves with them. To test this, run your app in the editor and move around the world space using the arrow or WASD keys. You’ll notice forward, backward and side-to-side movements keep the MainContainer with you. If you rotate the camera, the MainContainer doesn’t follow you.

To make the UI follow the user as he rotates in space, use the SolverRadialView, which by default keeps the object just inside the user’s peripheral view. How much of the object is visible can increase or decrease based on the component’s settings. In this use case, I don’t want the UI chasing the user as he turns away, so the SolverBodyLock is enough. It keeps the list of buttons with the user, but not always in their face.

Maintaining Size

In my app I expect people to move through a classroom setting. In MR, just like the real world, when people move away from an object, that object appears smaller. This creates problems: Using Gaze to target UI components that are on distant objects can be difficult, and icons can be difficult to discern from far away. The ConstantViewSize Solver addresses this by scaling the UI component up or down based on the user’s distance.

For this sample app you’re going to add a component that lets users build sentences. Each button pressed in the MainContainer adds to the sentence. For example, if the user wants to say, “I am hungry,” he would click the “I” button, then “am” button, then “hungry” button. Each click adds a word to the sentence container.

Create a new empty game object in the Hierarchy and call it Sentence. Add the Object Collection component to Sentence, set the Surface Type to Plane and Rows to 1. This creates a flat interface that won’t break into rows for content. For now, add a cube to the Sentence game object, so that you can see the constant size Solver in action. Later I’ll add the words from the buttons. Set the cube’s scale to x = 1.4, y = 0.15, z = 0.1. If you start the app now and move through the space, the white cube will shrink and grow as you move farther away and closer to it.

To lock the cuboid’s visual size, add the SolverConstantViewSize component to the Sentence object. The scale for the component can also be constrained using the minimum and maximum scale range settings, so you can control how large you want the UI component to grow or shrink and at what distances—this lets you scale components from a table top, to a classroom, to a warehouse.
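The idea behind a constant view size can be sketched independently of the toolkit. An object keeps the same visual angle when its size grows in proportion to its distance from the viewer, so a minimal sketch only needs a reference distance and a clamp. This is not the SolverConstantViewSize implementation; the class name, method name and parameters below are hypothetical and exist only to illustrate the arithmetic.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Mixed Reality Toolkit.
public static class ConstantViewSizeSketch
{
    // Returns the uniform scale that keeps an object at the same apparent size.
    // distance:          current distance from the user to the object, in meters.
    // referenceDistance: distance at which the object looks right at scale 1.
    // minScale/maxScale: clamp range, analogous to the solver's minimum and maximum scale settings.
    public static float ScaleForDistance(float distance, float referenceDistance,
                                         float minScale, float maxScale)
    {
        if (referenceDistance <= 0f)
            throw new ArgumentOutOfRangeException("referenceDistance");

        // Apparent size falls off in proportion to distance, so scale grows linearly with it.
        float scale = distance / referenceDistance;

        // Keep the result inside the allowed range.
        return Math.Max(minScale, Math.Min(maxScale, scale));
    }
}
```

With a reference distance of 2 meters and a scale range of 0.5 to 3, an object 4 meters away would be scaled to 2, while one 10 meters away would stop growing at the maximum of 3. Narrowing or widening that range is what lets the same UI work on a table top, in a classroom or in a warehouse.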

There are more solvers available in the Mixed Reality Toolkit, including Momentumizer and Surface Magnetism. I encourage you to check out the Solver System and grow your own MR experience with a UI that can work with the user in the real-world space (bit.ly/2txcl4c).

The Sentence and the Receiver

To enable the buttons in the MainContainer to connect with the Sentence object, I implement a Receiver that receives events from the buttons pressed in the MainContainer and adds the content of that button to the Sentence. Using the Interactable Object and Receiver pattern built into the Mixed Reality Toolkit, connecting the prefab buttons found in the HoloToolkit | UX | Prefabs | Buttons folder is simple.

Create a new C# script called Receiver in the Scripts folder of your project, as shown in Figure 5. Change the script to inherit from InteractionReceiver instead of MonoBehaviour. Create a public variable to hold a reference to the Sentence game object and then implement the override method for InputDown to respond to a user’s click action on the Holographic buttons.

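    // Namespaces as found in the HoloToolkit package.
    using HoloToolkit.Unity.Collections;
    using HoloToolkit.Unity.InputModule;
    using HoloToolkit.Unity.Receivers;
    using UnityEngine;

    public class Receiver : InteractionReceiver
    {
        // Object Collection that displays the sentence being built.
        public ObjectCollection Sentence;

        protected override void InputDown(GameObject obj, InputEventData eventData)
        {
            switch (obj.name)
            {
                default:
                    // Copy the pressed button into the Sentence collection and re-run the layout.
                    GameObject newObj = Instantiate(obj, Sentence.gameObject.transform);
                    newObj.name = "Say" + obj.name;
                    Sentence.UpdateCollection();
                    break;
            }
        }
    }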

Inside of the InputDown method, create a switch statement that checks the name property of the obj game object. For now, create the default condition, which occurs no matter what the game object name is. Within the default statement, I take the calling object and create a new instance of it under the Sentence game object. This creates a new instance of the button in the Sentence object collection without registering it with the Receiver. If you press the button in the Sentence object, nothing happens. Before testing, remove the white cuboid placeholder so you can see the buttons appearing on the Object Collection plane.

To connect the buttons in the MainContainer to the Receiver, you need a few lines of code added to the WordLoader. As the app loads words, each will be registered as an Interactable for the Receiver. Interactables are the list of game objects using the InteractableObject component that the Receiver reacts to when an event occurs. Within the WordLoader OnEnable method, add the following code directly under the childCount check:

    var receiver = GetComponent<Receiver>();
    if (receiver == null)
        return;

This code checks to ensure that a Receiver is present on the same game object as the WordLoader (Managers, in this example). Then in the foreach loop for the words, add the following code after loading the icon for the button:

    receiver.RegisterInteractable(newWord);

RegisterInteractable adds the game object to the list of interactables for the Receiver. Now clicking on the Holographic buttons in the MainContainer triggers the Receiver to perform the InputDown method and creates a copy of the button that was clicked.

With some minor reconfiguring of the MainContainer Object Collection cell size and placement, you’ll see something like the image shown in Figure 6.

Figure 6 MainContainer and Sentence Object Collections After Update

While I’m using the Holographic buttons in this example, the interactable component can be applied to anything from a custom mesh to text. Let your creativity help you imagine amazing interfaces. Just remember that one of the goals of MR is to merge the digital and physical worlds so that they work together.

Leveraging Text to Speech

Part of Fluent Design is being empathetic to your users’ needs. In this example, you’re enabling those lacking verbal speech to express themselves. Now you need to use Text to Speech to say out loud what the user has built in her sentence. The HoloToolkit provides an excellent component to do this with minimal code or configuration. If you’re not using a HoloLens, check out my MSDN Magazine article, “Using Cognitive Services and Mixed Reality” (msdn.com/magazine/mt814418), to see how to use Text to Speech through Azure Cognitive Services.

To start using Text to Speech, add an Audio Source to your project Hierarchy. This is used by the Text to Speech component to generate audio for users to hear. Now add the Text to Speech component (found in HoloToolkit | Utilities | TextToSpeech) to the Managers game object. I use the Managers game object as the home for all utility methods (Receiver, Text to Speech) to centralize events and what happens with those events. In this case the Receiver will use the Text to Speech component to say the sentence using the same Receiver setup you have for adding words to the sentence. Set up the Text to Speech component to use the Audio Source you just created by selecting it in the Inspector.

In the Receiver, I’m going to detect if any words exist in the Sentence, and if so, I’ll add a button that will say the Sentence. Add a new game object public variable called SayButton to the Receiver component.

public GameObject SayButton;

This will be the prefab used as the Say button. Within the switch statement’s default block add the following if statement prior to adding the new object to the Sentence:

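    // CompoundButtonText requires "using HoloToolkit.Unity.Buttons;" at the top of the file.
    if (Sentence.transform.childCount == 0)
    {
        // The sentence is empty, so add the Say button first to keep it at the far left.
        GameObject newSayButton = Instantiate(SayButton, Sentence.transform);
        newSayButton.name = "Say";
        newSayButton.GetComponent<CompoundButtonText>().Text = "Say: ";
        GetComponent<Receiver>().RegisterInteractable(newSayButton);
    }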

In this case when there aren’t any children in the Sentence object, add the Say button prior to any other buttons. This will keep Say to the far left of the Sentence object for any sentence. Next, create a new case statement in the switch to detect the Say button by name. Remember, the switch is based on obj.name and when you created this button you set the name to “Say.” Here’s the code:

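    // Requires "using System.Text;" (StringBuilder) and "using HoloToolkit.Unity;" (TextToSpeech)
    // at the top of the file.
    case "Say":
        StringBuilder sentenceText = new StringBuilder();
        // Collect the label of every button under Sentence.
        // Note that this includes the Say button's own label ("Say: ").
        foreach (CompoundButtonText text in Sentence.GetComponentsInChildren<CompoundButtonText>())
        {
            sentenceText.Append(text.Text + " ");
        }
        TextToSpeech tts = GetComponent<TextToSpeech>();
        tts.StartSpeaking(sentenceText.ToString());
        break;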

This case detects the Say button by name and then loops through all the children of Sentence to build a string of words for the TextToSpeech component to say as a sentence. When the user is done building a statement, he can press the Say button to express his statement. For simplicity’s sake I did not have you implement the Text to Speech Manager of the HoloToolkit (bit.ly/2lxJpoq), but it’s helpful to avoid situations like a user pressing Say over and over again, resulting in the Text to Speech component trying to say multiple things at the same time.
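Because the Say case appends every CompoundButtonText label under Sentence, the spoken string also starts with the Say button’s own “Say: ” label. If you’d rather speak only the words, one option is to filter that label out while building the string. The sketch below shows the string handling on its own, in plain C# without any Unity or HoloToolkit types; the SentenceTextSketch class and its Build method are hypothetical names used for illustration.

```csharp
using System.Collections.Generic;
using System.Text;

// Hypothetical helper for illustration; not part of the HoloToolkit.
public static class SentenceTextSketch
{
    // Joins button labels into one sentence, skipping the Say button's own label.
    public static string Build(IEnumerable<string> labels, string sayLabel)
    {
        StringBuilder sentence = new StringBuilder();
        foreach (string label in labels)
        {
            // Do not speak the Say button itself.
            if (label == sayLabel)
                continue;

            // Separate words with a single space, with no trailing space at the end.
            if (sentence.Length > 0)
                sentence.Append(' ');
            sentence.Append(label);
        }
        return sentence.ToString();
    }
}
```

Calling SentenceTextSketch.Build(new[] { "Say: ", "I", "am", "hungry" }, "Say: ") returns "I am hungry". In the Receiver you could pass in the Text values gathered from the Sentence children and hand the result to StartSpeaking.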

Wrapping Up

Using Fluent Design with MR is a natural fit. Tools like the Mixed Reality Toolkit and Mixed Reality Design Labs are excellent springboards to build an experience that’s adaptive, empathetic and beautiful. In this article I covered how to implement some of the experiences shown at Build 2018 for MR. By using Object Collections as a layout tool, Solver Systems to keep the UI with the user as they move through space, and then connecting the experience with a Receiver for centralized event management, I showed you how to quickly build an MR app.

Using the examples from the Mixed Reality Toolkit, you can take this sample app further with new interface options and capabilities. Review the MR sessions from Build 2018 to get further inspiration on how to build exceptional experiences that blend the physical and digital worlds.

Tim Kulp is the director of Emerging Technology at Mind Over Machines in Baltimore, Md. He’s a mixed reality, artificial intelligence and cloud app developer, as well as author, painter, dad, and “wannabe mad scientist maker.” Find him on Twitter: @tim_kulp or via LinkedIn: linkedin.com/in/timkulp.